Beyond Hardware: Building the Software Foundation for Heterogeneous GPUs

As the number of GPU vendors grows, their software and hardware ecosystems remain fragmented, effectively forming isolated “silos.” Rather than forcing tight hardware-level co-execution (“hard coordination”), the path forward lies in software-level interoperability: enabling flexible deployment and efficient scheduling of heterogeneous inference workloads through a universal, vendor-agnostic communication library.

Do We Need Heterogeneous GPU Communication?

The number of GPU vendors is growing rapidly, yet their software and hardware ecosystems remain largely fragmented, effectively forming isolated “silos.” Differences in underlying hardware architectures contribute to this fragmentation, but the more fundamental cause is that each vendor builds a closed, vertically integrated software stack around its own hardware. Such a stack includes a vendor-specific compute platform (e.g., CUDA, ROCm, NeuWare, MUSA), communication libraries (e.g., NCCL, UCC, HCCL), and vendor-specific adaptations of mainstream training and inference frameworks such as Megatron-LM and vLLM.

Notably, even the same framework often spawns multiple incompatible variants across vendors or use cases—such as large-scale distributed training versus high-throughput inference—with divergent APIs, dependencies, and runtime behaviors. This version fragmentation further impedes cross-platform interoperability and collaboration.

Before deciding whether to invest in cross-vendor GPU communication capabilities, we must first address a foundational question: Do large-scale AI workloads—such as training and inference for large language models—truly require collaborative computation across GPUs from different vendors? Two prevailing viewpoints exist:

- Proponents argue that geopolitical constraints (e.g., U.S. export controls on high-end GPUs) and supply chain resilience concerns have already forced many data centers into mixed-GPU deployments. In such environments, enabling heterogeneous GPU collaboration—whether for training or inference—could help unlock otherwise idle compute resources.

- Skeptics, however, point out that state-of-the-art AI clusters are typically built around a single vendor—and often a single GPU model—to fully exploit co-optimized hardware and software features like high-speed interconnects, unified memory models, and tuned communication libraries. Introducing GPUs from different vendors or generations inevitably triggers a “weakest-link” bottleneck, degrading overall throughput and increasing scheduling complexity. In training—where tight synchronization and high-bandwidth collective communication are critical—the practical gains from heterogeneity often fall far short of expectations.

To be sure, several Chinese institutions, including Biren Technology, the Beijing Academy of Artificial Intelligence (BAAI), and China Mobile Research Institute, have demonstrated prototype heterogeneous training systems that combine domestic GPUs with NVIDIA accelerators for large-model pretraining. Nevertheless, full-cycle pretraining remains poorly suited to heterogeneous GPU setups.

The core issue lies in the nature of pretraining itself: it tightly couples forward and backward passes and relies on frequent, high-volume synchronization (gradient reduction in data parallelism, activation exchange in tensor and pipeline parallelism). When participating devices differ in compute capability, memory bandwidth, or network throughput, the system inevitably suffers from pipeline bubbles, communication stalls, and load imbalance. Even with sophisticated task partitioning or dynamic scheduling, heterogeneous clusters struggle to match the efficiency of homogeneous ones.
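The weakest-link effect can be made concrete with a little arithmetic. The sketch below assumes a purely synchronous data-parallel step whose duration is set by the slowest device, ignores communication time entirely, and uses made-up per-device throughputs; none of the numbers are measurements.

```python
def step_time(samples_per_device, throughput):
    """Time of one synchronous step: every device waits for the slowest one."""
    return max(n / t for n, t in zip(samples_per_device, throughput))


def equal_split(global_batch, throughput):
    """Give every device the same number of samples."""
    n = global_batch / len(throughput)
    return [n] * len(throughput)


def proportional_split(global_batch, throughput):
    """Give each device a share proportional to its throughput."""
    total = sum(throughput)
    return [global_batch * t / total for t in throughput]


# Hypothetical throughputs in samples/s: two fast devices, two slower ones.
throughput = [100.0, 100.0, 50.0, 50.0]
global_batch = 1200

t_equal = step_time(equal_split(global_batch, throughput), throughput)
t_prop = step_time(proportional_split(global_batch, throughput), throughput)

print(f"equal split:        {t_equal:.1f} s per step")
print(f"proportional split: {t_prop:.1f} s per step")
```

Under these assumptions the equal split leaves the fast devices idle for half of every step. A proportional split removes that idle time in this idealized model, but it does nothing about the synchronization traffic that the sketch deliberately ignores.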

Publicly reported results are consistent with this: the best current heterogeneous solutions approach, but do not match or exceed, the performance of homogeneous baselines. Moreover, large-scale deployment faces severe hardware compatibility hurdles: differences in PCIe generation, GPUDirect RDMA support, intra-node GPU memory sharing mechanisms (e.g., IPC), and the presence or absence of NVLink-like ultra-fast interconnects all constrain scalability and stability.

In contrast, the dominant workload in real-world data centers is inference, not training. Large model inference exhibits fundamentally different characteristics:

- Inference typically runs quantized or compressed models, with significantly lower computational demands than training. Depending on model size, quantization scheme, and parallelization strategy, inference often fits on just one or a few GPUs within a single node. Tensor parallelism is generally confined to intra-node execution, as cross-node deployment incurs prohibitive communication overhead.

- Inference involves only the forward pass—no backpropagation—dramatically reducing communication frequency. Modern designs like Mixture-of-Experts (MoE) or Prefill/Decode (PD) separation primarily require point-to-point transfers (e.g., KV cache movement) or All-to-All/AllGather operations (for expert routing or intermediate state exchange). Only tensor parallelism necessitates AllReduce.

- Most importantly, inference is request-driven. A scheduler can naturally assign incoming requests to different nodes, achieving request-level data parallelism without requiring fine-grained device coordination.

Together, these traits make inference inherently fine-grained, loosely coupled, and unidirectional in data flow—with minimal reliance on large-scale, tightly synchronized hardware. This aligns exceptionally well with heterogeneous cluster environments.
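To get a feel for the volume of the point-to-point transfers mentioned above, the size of a KV cache can be estimated with the usual formula for a decoder-only transformer: 2 (keys and values) × layers × KV heads × head dimension × tokens × bytes per element. The model dimensions below are illustrative assumptions, not taken from any specific deployment.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, num_tokens, bytes_per_elem=2):
    """KV cache size for one sequence in a decoder-only transformer.

    The factor 2 accounts for storing both keys and values.
    """
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_elem


# Hypothetical model: 32 layers, 8 KV heads (grouped-query attention),
# head dimension 128, 2-byte (FP16) cache entries, 4096-token prompt.
size = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, num_tokens=4096)
print(f"{size / 2**20:.0f} MiB")
```

For these assumed dimensions a single long prompt already yields hundreds of MiB of state, which is why the speed of the transfer path matters for prefill/decode separation.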

Thus, the key challenge in heterogeneous inference is not “Can GPUs compute together?” but rather: How can we efficiently route inference requests to the most suitable GPU type at the cloud orchestration layer to maximize global throughput? And how can we enable fast, low-latency migration of model weights, KV caches, and other state between disparate devices?
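The routing question can be illustrated with a toy scheduler. The sketch assumes each pool of identical GPUs has a known sustained throughput and serves requests one after another; `GpuPool`, `route`, and all numbers are hypothetical, and the greedy earliest-finish heuristic is chosen for brevity rather than taken from any production system.

```python
from dataclasses import dataclass


@dataclass
class GpuPool:
    """A group of identical accelerators; all fields are illustrative."""
    name: str
    tokens_per_second: float   # assumed sustained throughput
    busy_until: float = 0.0    # time at which already-queued work finishes


def route(pools, num_tokens, now=0.0):
    """Greedy routing: pick the pool that would finish this request earliest."""
    def finish_time(pool):
        start = max(now, pool.busy_until)
        return start + num_tokens / pool.tokens_per_second

    best = min(pools, key=finish_time)
    best.busy_until = finish_time(best)
    return best.name


pools = [
    GpuPool("vendor_a", tokens_per_second=2000.0),
    GpuPool("vendor_b", tokens_per_second=1200.0),
]
# Three requests arriving at time 0, each needing 2000 tokens of work.
assignments = [route(pools, 2000) for _ in range(3)]
print(assignments)
```

Even this simple policy sends the second request to the slower pool once the faster one is busy, which is the request-level parallelism described above: no device ever has to coordinate with a device from another vendor.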

In summary, we argue that tightly coupled heterogeneous collaboration is neither necessary nor practical for large-model pretraining—its engineering complexity and performance penalties far outweigh potential benefits.

However, the inference landscape is fundamentally different. As heterogeneous GPU deployments become commonplace, efficiently integrating multi-vendor accelerators to serve diverse inference workloads is now critical for improving resource utilization and service elasticity. This does not require fine-grained co-execution across GPUs; instead, it demands:

- Unified scheduling of heterogeneous hardware resources;

- Rapid data movement of models and KV caches across device boundaries.

To enable this vision, we urgently need a universal, high-performance, vendor-neutral communication library that seamlessly supports point-to-point and All-to-All operations (e.g., KV cache exchange, expert routing) across all GPU types—while abstracting away differences in drivers, memory models, and interconnect architectures.

This—not forced training co-execution—is the true infrastructure priority for the heterogeneous AI era.

Why Build a Heterogeneous GPU Communication Library?

Current high-performance communication libraries largely lack support for direct data transfer between GPUs from different vendors. Libraries like NCCL, while offering point-to-point (P2P) capabilities, are deeply coupled to vendor-specific software stacks—most notably CUDA—and cannot be easily extended to other GPU architectures.

On the other hand, general-purpose frameworks such as UCX aim for hardware neutrality but are constrained by legacy design choices that prevent direct GPU-to-GPU memory transfers across heterogeneous devices. As a result, inter-vendor GPU communication today must fall back to CPU memory staging: data is first copied from the source GPU’s VRAM to host memory over PCIe, then transferred again from host memory to the destination GPU’s VRAM. This “GPU → CPU → GPU” path introduces significant latency and repeatedly consumes scarce PCIe bandwidth—severely degrading throughput, especially in high-concurrency inference or frequent KV cache exchange scenarios.
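The cost of staging can be estimated with a simple bandwidth model. The sketch assumes the two PCIe copies run back to back with no pipelining, and the payload size and effective bandwidths are hypothetical; real figures depend on PCIe generation, NIC, and topology.

```python
def transfer_seconds(num_bytes, bandwidth_gb_s, latency_s=0.0):
    """Time to move num_bytes over one link with the given effective bandwidth."""
    return latency_s + num_bytes / (bandwidth_gb_s * 1e9)


def staged_seconds(num_bytes, d2h_gb_s, h2d_gb_s, latency_s=0.0):
    """GPU -> host -> GPU: two copies performed one after the other."""
    return (transfer_seconds(num_bytes, d2h_gb_s, latency_s)
            + transfer_seconds(num_bytes, h2d_gb_s, latency_s))


# Hypothetical payload and effective bandwidths.
payload = 500_000_000   # 0.5 GB of KV cache
pcie_gb_s = 25.0        # assumed for both device-to-host and host-to-device
direct_gb_s = 25.0      # assumed for a direct device-to-device path

staged = staged_seconds(payload, pcie_gb_s, pcie_gb_s)
direct = transfer_seconds(payload, direct_gb_s)
print(f"staged: {staged * 1e3:.0f} ms, direct: {direct * 1e3:.0f} ms")
```

Even when the direct path is assumed to be no faster than a single PCIe hop, the staged path takes twice as long in this model, and it additionally occupies host memory for the bounce buffer.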

Compounding this issue is the fragmented landscape of interconnect technologies. Each GPU vendor has developed its own high-speed link: NVIDIA offers NVLink, GPUDirect RDMA, and P2P memory access; Cambricon has MLU-Link; Moore Threads introduced MTLink; and industry consortia are promoting standards like UALink. These solutions differ widely in protocol, topology, bandwidth, and software interfaces—and remain mutually incompatible.

If every application or scheduler must implement custom logic for each interconnect type, development complexity becomes unsustainable. Hence, there is a pressing need for a unified communication abstraction layer that hides the heterogeneity of underlying hardware interconnects and exposes a consistent, reliable, and high-performance data transfer interface to upper-layer systems. This is not merely an engineering convenience—it is the foundational enabler for resource pooling in heterogeneous inference clusters.
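One way to picture such an abstraction layer is a small interface that every backend implements. The following is a hypothetical sketch, not the API of any existing library: `Transport`, `HostStagedTransport`, `DirectTransport`, and `move` are names invented here, and device memory is simulated with `bytearray` so the example runs without any GPU runtime.

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Hypothetical interface that hides how bytes move between two buffers."""

    @abstractmethod
    def send(self, src: bytearray, dst: bytearray) -> int:
        """Copy src into dst and return the number of bytes moved."""


class HostStagedTransport(Transport):
    """Fallback path: source -> host bounce buffer -> destination."""

    def send(self, src, dst):
        host = bytes(src)            # first copy: "device" to host
        dst[:len(host)] = host       # second copy: host to "device"
        return len(host)


class DirectTransport(Transport):
    """Stand-in for a direct device-to-device path (e.g. an RDMA write)."""

    def send(self, src, dst):
        dst[:len(src)] = src         # single copy, no host bounce buffer
        return len(src)


def move(transport: Transport, src: bytearray, dst: bytearray) -> int:
    """Upper layers call this and never see which backend is in use."""
    return transport.send(src, dst)


src = bytearray(b"kv-cache-block")
dst = bytearray(len(src))
moved = move(DirectTransport(), src, dst)
print(moved, dst == src)
```

The point of the design is that a scheduler written against `move` does not change when a new interconnect backend is added; only a new `Transport` subclass is needed.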

What We Built: HMC – A Communication Library for Heterogeneous GPUs

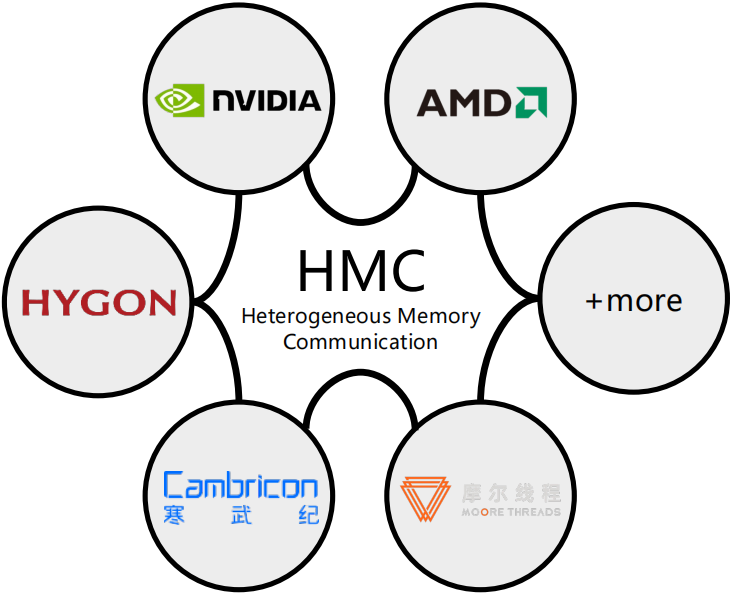

We have developed HMC (Heterogeneous Memory Communication), a high-performance communication library designed specifically for heterogeneous computing environments. Its mission is clear: enable efficient and reliable data exchange between GPUs from different vendors—without CPU staging and without lock-in to any single vendor’s software stack.

Rather than replacing NCCL or UCX, HMC operates at a higher abstraction layer. It unifies diverse hardware backends and interconnect protocols under a single, clean API. Whether your cluster mixes NVIDIA, AMD, Hygon, Cambricon, or Moore Threads accelerators, HMC provides consistent semantics for memory allocation, data copying, and point-to-point transfers.

HMC delivers four key capabilities that form the foundation of efficient heterogeneous communication:

Unified Memory Abstraction. Applications no longer need to manage vendor-specific runtimes (e.g., CUDA, ROCm, MUSA, CNRT). With HMC’s Memory interface, they can allocate and copy data across devices using a single, device-agnostic API.

Direct GPU-to-GPU Data Paths. Leveraging registered buffers (ConnBuffer) and a unified transport layer (supporting heterogeneous RDMA direct-write, UCX, etc.), HMC dynamically negotiates the optimal communication path between peer GPUs, enabling direct VRAM-to-VRAM transfers and eliminating unnecessary PCIe round-trips through host memory.

Native Support for Heterogeneous Topologies. HMC allows applications to construct fine-grained, cross-vendor GPU topologies at the card level. On such topologies, it natively supports heterogeneous All-to-All operations—without requiring artificial homogeneity constraints or shim layers.

Flexible Control and Integration. A lightweight control plane (over TCP or Unix Domain Sockets) handles synchronization and small-message coordination. Dual Python and C++ APIs enable rapid integration into mainstream inference frameworks like vLLM or custom orchestration systems.
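Path negotiation of the kind described above can be sketched as choosing the most preferred transport that both peers advertise. The capability names, the preference order, and the `negotiate` helper are assumptions made for illustration; the text does not specify how HMC actually performs this negotiation.

```python
# Hypothetical preference order, fastest first. Host staging is assumed to be
# available everywhere and therefore acts as the last-resort fallback.
PREFERENCE = ["rdma_direct", "ucx", "host_staged"]


def negotiate(local_caps, remote_caps):
    """Return the most preferred transport supported by both peers."""
    common = set(local_caps) & set(remote_caps)
    for name in PREFERENCE:
        if name in common:
            return name
    raise RuntimeError("peers share no transport")


gpu_a = {"rdma_direct", "ucx", "host_staged"}
gpu_b = {"ucx", "host_staged"}
gpu_c = {"rdma_direct", "host_staged"}

print(negotiate(gpu_a, gpu_b))
print(negotiate(gpu_a, gpu_c))
print(negotiate(gpu_b, gpu_c))
```

In a real system the capability sets would be exchanged over the control plane before any bulk data moves, so a mismatch degrades to a slower path instead of failing the transfer.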

HMC has been validated in real-world heterogeneous environments and fully supports NVIDIA, AMD, Hygon, Cambricon, and Moore Threads accelerators. It demonstrates stable, high-throughput data movement across CPU-GPU, homogeneous GPU, and cross-vendor heterogeneous GPU configurations.

As an initial end-to-end validation, we integrated HMC into vLLM with minimal modifications and successfully ran pipeline-parallel inference on a mixed NVIDIA–AMD GPU cluster, which demonstrates that heterogeneous large-model serving is feasible in practice.

Our vision is simple yet ambitious: Every GPU, regardless of vendor, should be efficiently schedulable, flexibly composable, and seamlessly interoperable in inference workloads.

HMC is the communication cornerstone that makes this open, collaborative, and high-performance heterogeneous inference ecosystem possible.

Roadmap

Support Native Interconnects from Domestic GPU Vendors

HMC currently lacks full support for vendor-specific high-speed interconnects such as Cambricon’s MLU-Link and Moore Threads’ MTLink. Due to limited public APIs for low-level integration, we plan to bridge this gap by integrating vendors’ private collective libraries (e.g., HCCL, MThreads Collective) as optimized backends for homogeneous communication within the same device family. This hybrid approach allows HMC to deliver peak performance on native hardware while maintaining cross-vendor interoperability at the system level.

Advance All-to-All Capabilities & Deep Framework Integration

We will enhance HMC’s All-to-All algorithms and integrate them into leading inference frameworks like vLLM and SGLang, enabling advanced deployment strategies such as Expert Parallelism (EP) and efficient cross-device KV cache transfer in heterogeneous settings.
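For readers unfamiliar with the collective, All-to-All means that rank i delivers its j-th block to rank j. The sketch below simulates it in plain Python as a sequence of point-to-point sends using a simple shifted schedule (in step s, rank i sends to rank (i + s) mod n). This is a textbook schedule chosen for illustration and is not a description of HMC's algorithm.

```python
def all_to_all(send_blocks):
    """Simulate All-to-All: recv[j][i] ends up holding send_blocks[i][j].

    send_blocks[i][j] is the block that rank i wants to deliver to rank j.
    """
    n = len(send_blocks)
    recv = [[None] * n for _ in range(n)]
    for step in range(n):
        # In each step every rank sends exactly one block and receives one.
        for src in range(n):
            dst = (src + step) % n
            recv[dst][src] = send_blocks[src][dst]   # one point-to-point send
    return recv


# Three ranks; block "i->j" is what rank i sends to rank j.
send = [[f"{i}->{j}" for j in range(3)] for i in range(3)]
recv = all_to_all(send)
for rank, blocks in enumerate(recv):
    print(rank, blocks)
```

Because the collective decomposes into point-to-point sends, a library that can move data between any pair of devices can in principle provide All-to-All across vendors; the engineering effort lies in scheduling those sends well on an uneven topology.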

However, a major obstacle remains: fragmented software support from domestic GPU vendors. For example:

- Hygon provides only a vLLM 0.6.x port;

- Cambricon has updated to vLLM 0.9.x;

- Moore Threads offers no official support—even for its previous flagship S3000.

This inconsistency in framework maintenance creates a “version maze” that severely hinders real-world adoption of heterogeneous inference.

Hardware innovation is necessary—but not sufficient. What truly attracts and retains developers is a stable, user-friendly, and continuously evolving software stack. That is the bedrock of any thriving ecosystem.

Expand GPU Support Through Open Collaboration

Today’s domestic GPU ecosystem remains relatively closed, with uneven support across drivers, runtimes, and toolchains. We sincerely invite GPU vendors to join us in co-building an open, interoperable future—whether through:

- Public collaboration on driver/runtime compatibility,

- Provisioning of actively maintained hardware for testing,

- Or sharing of up-to-date, production-ready software releases.

Only through hardware-software co-design and community-driven openness can we unlock the full potential of heterogeneous computing—not just in benchmarks, but in real production systems.

We would greatly appreciate any feedback or insights you are willing to share.